The Modern Data Platform – challenge 3 – SLOW PERFORMANCE. Part Two

Following on from part one in the previous blog, I will now continue through challenge three, SLOW PERFORMANCE, beginning with Data Consumption.

FRONTEND (DATA CONSUMPTION)

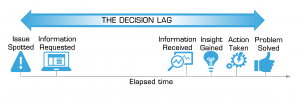

Before getting into the detail, I want to introduce the concept of Decision Lag.

“The decision lag is the period between the time when the need for action is recognised and the time when action is taken.”

https://www.britannica.com/topic/decision-lag

In data warehousing and business intelligence terms, this is the elapsed time between someone realising they need data to support a decision and the moment they actually receive that data in a format they can consume.

Using our restaurant analogy, it is the period between deciding what you want to eat and putting it in your mouth!

Discussion of Decision Lag also introduces an interesting behavioural concept. The majority of complaints are associated with the perceived elapsed time between the user requesting and receiving the information. On closer inspection, it is typical to find large amounts of time being wasted identifying the information required and then translating it into the relevant action. Users, however, are unlikely to complain about this, as it is more likely a reflection on their own analytical and decision-making abilities! Solving this particular issue is much more about educating your users to be more analytical and BI savvy. A strong Business Intelligence competency centre should be driving this increase in business user analytical maturity.

However, regardless of the bias described above, it is extremely common to find scenarios where long-running reports and queries, or an inability to run requests on demand, point to underlying performance issues that affect the ability to produce data that is ready to be consumed.

Again, we can typically group all of these performance issues into one of two categories.

- Query execution – the time it takes to get the data back to the BI platform / tool.

- Information Presentation – the time it takes for the data to be converted into and presented as usable information.
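To see which of the two categories dominates for a given report, it can help to time them separately. The following is a minimal sketch, assuming a hypothetical in-memory SQLite table called `sales` standing in for the warehouse and a plain-text formatter standing in for the BI tool; none of these names come from a real platform.

```python
import sqlite3
import time


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def build_demo_db():
    # Hypothetical in-memory table standing in for a data warehouse.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("north", 10.0), ("south", 20.0), ("north", 5.5)],
    )
    return conn


def run_query(conn):
    # Category 1: query execution, getting the rows back to the tool.
    return conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()


def present(rows):
    # Category 2: information presentation, turning rows into a readable report.
    return "\n".join(f"{region:<8}{total:>10.2f}" for region, total in rows)


if __name__ == "__main__":
    conn = build_demo_db()
    rows, query_secs = timed(run_query, conn)
    report, present_secs = timed(present, rows)
    print(report)
    print(f"query: {query_secs:.6f}s, presentation: {present_secs:.6f}s")
```

On a real platform the same split applies: measure the time until the last row arrives, then measure the time the tool spends rendering, and tune whichever is larger.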

The explosive growth of the app economy and its accelerating effect on the consumerisation of IT has had a profound impact on the expectations placed on our humble data warehouse. Users are no longer prepared to wait to get the data they need in the format they want it, and above and beyond all this they expect it to be simple to consume.

Query Execution

As previously mentioned, query execution time is the most common performance problem that we hear complaints about or are asked to help with. Increasing data volumes, multiple data sets and more complex analytical requirements all lead to increasingly complex and, as a result, longer-running queries.

In the early days of Data Warehousing we were predominantly focused on preparing data that ended up in flat reports that were generally scheduled, distributed and consumed on daily, weekly and monthly cycles. Those days are gone. The modern set of use cases is not as batch oriented and, as a result, is far less predictable, which has a certain irony when you consider that many of the new use cases are related to the rise in popularity of predictive analytics. The traditional performance optimisation activities such as pre-aggregation, indexing, faster disks, more cores and OLAP servers will only get you so far, particularly when you start being asked to support predictive models running over terabytes of unstructured log data that is itself being topped up in real time.
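As an illustration of why pre-aggregation only gets you so far, here is a small sketch using SQLite from the Python standard library. The `sales` and `daily_sales` tables are hypothetical, invented for the example. The summary table answers the question cheaply, but it is only as fresh as the last batch refresh.

```python
import sqlite3


def build_warehouse():
    # Hypothetical detail table standing in for warehouse fact data.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("2024-01-01", "north", 10.0),
            ("2024-01-01", "north", 5.0),
            ("2024-01-01", "south", 7.5),
            ("2024-01-02", "north", 2.5),
        ],
    )
    return conn


def refresh_daily_summary(conn):
    # Batch step: rebuild the pre-aggregated table from the detail rows.
    conn.execute("DROP TABLE IF EXISTS daily_sales")
    conn.execute(
        "CREATE TABLE daily_sales AS "
        "SELECT sale_date, region, SUM(amount) AS total "
        "FROM sales GROUP BY sale_date, region"
    )


def total_from_detail(conn, region):
    # Always current, but scans every detail row for the region.
    sql = "SELECT SUM(amount) FROM sales WHERE region = ?"
    return conn.execute(sql, (region,)).fetchone()[0]


def total_from_summary(conn, region):
    # Reads far fewer rows, but reflects only the last refresh.
    sql = "SELECT SUM(total) FROM daily_sales WHERE region = ?"
    return conn.execute(sql, (region,)).fetchone()[0]


if __name__ == "__main__":
    conn = build_warehouse()
    refresh_daily_summary(conn)
    # A new row arrives after the batch has run.
    conn.execute("INSERT INTO sales VALUES ('2024-01-02', 'north', 1.0)")
    print("detail :", total_from_detail(conn, "north"))
    print("summary:", total_from_summary(conn, "north"))
```

The two totals disagree until the next refresh, which is acceptable for a monthly report and much less so for data that is being topped up continuously.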

We are, in short, expecting the restaurant to prepare a complex haute cuisine dish in the time it takes to get a McDonald's.

Information Presentation

The focus of this blog series is of course the challenges associated with the traditional data warehouse. It is, however, impossible to talk about performance without considering the role played by the architectural layer that sits between the data warehouse and the decision maker: the Business Intelligence platform or tool.

It would be tempting to author an additional series of blogs discussing the trials and tribulations associated with eking out the best information delivery performance. Perhaps another day. In the interim, I will say this. Psychologically, the front end tool has a disproportionate influence on opinion so it is incredibly important to think beyond the data warehouse when considering performance.

It is also true that one poorly formed query from a loosely governed front end tool can wreak havoc on your data warehouse. With that in mind, here are a few questions to consider in relation to the performance relationship between your data warehouse and front end BI tools:

- Do you know and understand all of the tools that are in use against your data warehouse?

- Do you genuinely understand from a business perspective the value of what people are trying to do with the data they get from the warehouse?

- Have you checked and benchmarked the SQL (or MDX) that the tools generate to see if it can be improved?

- Can you provide native connections to all the tools you have to support?

- Can your data warehouse provide open standard based connectivity for programmatic data access and interfacing?

- How much of their workload do your tools offload to the database?

- Does your data warehouse provide asynchronous connections?

- Where will data caching (if available) take place?

- Does your warehouse support in-database processing to improve performance?

- If you provide mobile access, where does the data processing happen?
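For the question above about checking and benchmarking tool-generated SQL, one possible starting point is to capture a statement, inspect its query plan and time it before and after a change. This is a rough sketch only: it uses SQLite's `EXPLAIN QUERY PLAN` as a stand-in for whatever plan facility your own warehouse offers, and the `sales` table, the `idx_sales_region` index and the captured query are all hypothetical.

```python
import sqlite3
import time


def build_demo_db(rows=10_000):
    # Hypothetical table standing in for a warehouse fact table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        ((f"region_{i % 100}", float(i)) for i in range(rows)),
    )
    return conn


def query_plan(conn, sql, params=()):
    # The last column of each EXPLAIN QUERY PLAN row is the readable detail.
    plan_rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in plan_rows]


def uses_index(conn, sql, params=()):
    return any("INDEX" in step for step in query_plan(conn, sql, params))


def best_time(conn, sql, params=(), repeats=5):
    # Best of several runs, to reduce noise from other activity.
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    conn = build_demo_db()
    # Imagine this statement was captured from a BI tool's query log.
    sql = "SELECT SUM(amount) FROM sales WHERE region = ?"
    params = ("region_7",)
    print(query_plan(conn, sql, params), best_time(conn, sql, params))
    conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
    print(query_plan(conn, sql, params), best_time(conn, sql, params))
```

The exact plan wording and the timings vary by engine and version, so treat the output as a prompt for investigation and not as a benchmark result.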

The bottom line is this: the technical requirements and performance expectations placed on a typical data warehouse have increased exponentially over recent years and many are struggling to keep up.

So what’s the answer?

Is it possible to have a data warehouse that can speed the ingestion of traditional transactional sources as well as being able to load real time streaming and unstructured data without grinding to a halt?

Can you have a data platform that is able to scale and leverage modern, high power (yet still commodity) hardware to accelerate the preparation of the data so that it can be made available for querying as soon as possible?

Is there a way to evolve your data warehouse in order to adopt new innovative technologies that have become available in recent times, to support new business workloads such as Predictive and Advanced Analytics?

Would it be possible to replace your existing aging and, frankly, struggling data warehouse without facing prohibitive costs or high barriers to accessing new technical skills?

The answer is yes (of course).

The Modern Data Platform

The Modern Data Platform delivers on all the requirements for a next generation data warehouse, enabling organisations to radically simplify their existing legacy or overly complex solutions in order to lower running costs, improve agility and gain breakthrough performance to deliver real business value.

Remember to follow the rest of the posts in this blog series where we will explore in detail the 2 remaining common challenges of traditional data warehousing.

To find out how the Modern Data Platform addresses the challenges of SLOW PERFORMANCE, download our webinar recording.